Tech giants tighten digital safety measures to protect minors online

Apple and Google are stepping up efforts to limit minors' access to nude images, introducing new age-verification and content safety systems across their platforms. The move comes amid growing global pressure on tech companies to do more to protect children in digital spaces.

Why Child Online Safety Has Become a Global Priority

For years, lawmakers, parents, and child-safety groups have raised concerns about how easily explicit content can reach underage users. With smartphones becoming more accessible to children, regulators worldwide are demanding stricter safeguards from technology companies.

Both Apple and Google have faced criticism in the past for not acting fast enough. These new measures signal a shift toward proactive responsibility rather than reactive fixes.

What Apple and Google Are Changing Right Now

According to reports, the two tech giants are rolling out systems designed to detect and restrict nude images for accounts identified as belonging to minors.

Key elements of the update include:

- Age-verification mechanisms to identify child and teen accounts

- Automatic detection of explicit images before they are viewed or shared

- Warning prompts discouraging minors from opening or sending nude content

- Parental notification options for younger users in family-linked accounts

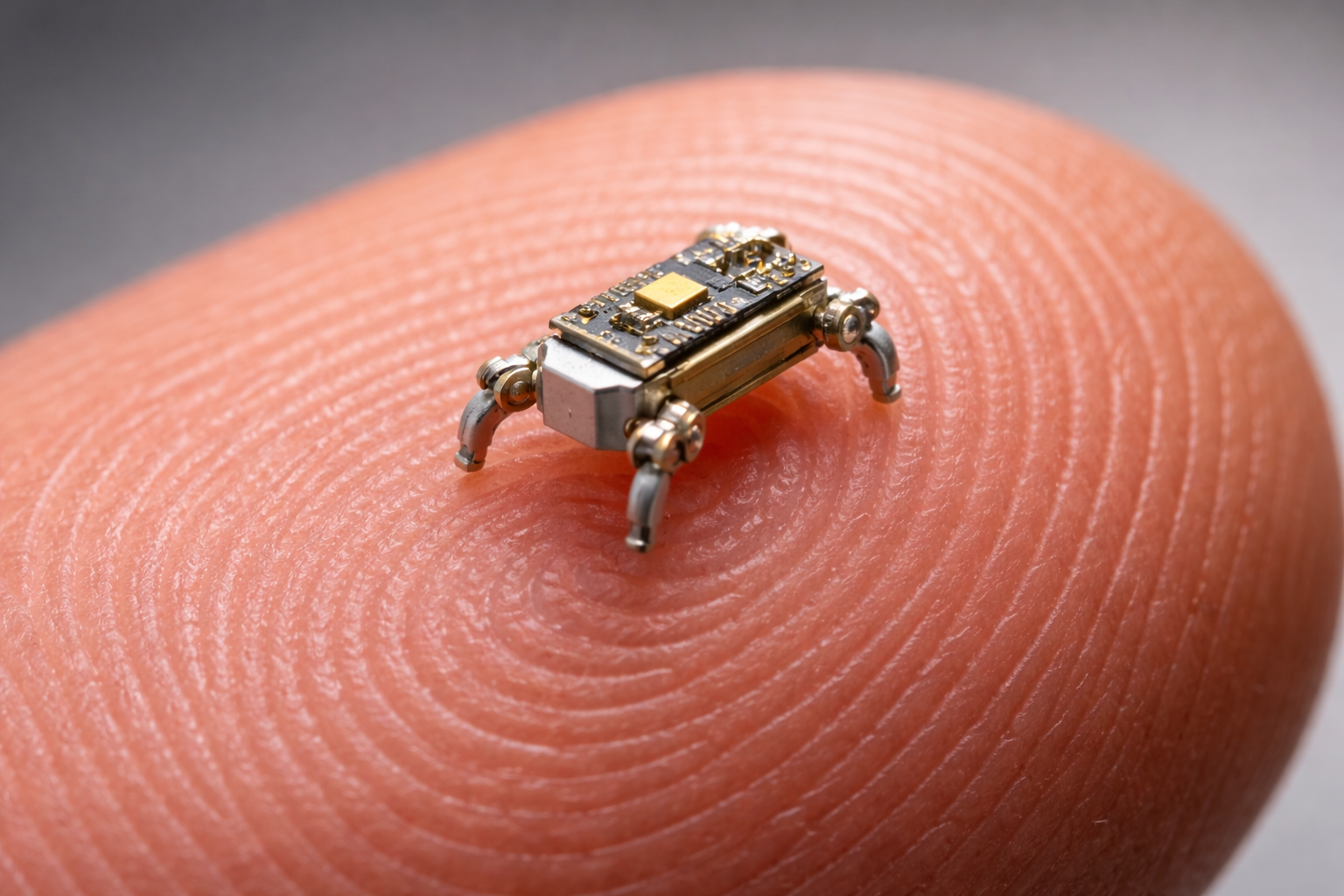

Apple’s system emphasizes on-device processing, meaning image analysis happens privately on the phone rather than on company servers. Google is expanding similar protections across Android and Google Messages.

Balancing Safety and Privacy

Digital privacy experts note that while child protection is essential, it must not come at the cost of user privacy or open the door to surveillance. Apple, in particular, has stressed that its technology does not give the company access to personal photos.

Industry analysts say this approach could become a model for future regulation, one in which safety tools operate locally on devices, reducing privacy risks while still offering meaningful protection.

Why This Matters for Users and Parents

For parents, these changes offer an extra layer of reassurance in an increasingly complex digital world. While no system is foolproof, built-in safeguards can help prevent accidental exposure and risky behavior.

For users, especially teenagers, the update reflects a broader shift toward responsible tech design, where platforms actively discourage harmful content rather than silently allowing it.

Could More Platforms Follow?

As governments continue pushing for stricter online child-safety laws, other tech companies may soon face similar expectations. Social media platforms, messaging apps, and app stores could be next in line to introduce stronger age-based content controls.

Future updates may also be shaped by clearer global standards for age verification, something regulators have long been debating.

Conclusion: A Significant Step Toward Safer Digital Spaces

Apple and Google’s decision to use age-verification tools to restrict nude images on minors' accounts marks an important moment in online safety. While challenges remain, the move shows that major tech companies are beginning to take a more active role in protecting younger users while limiting the impact on privacy.

As digital life continues to evolve, this balance between safety and personal freedom will remain at the center of the tech policy conversation.