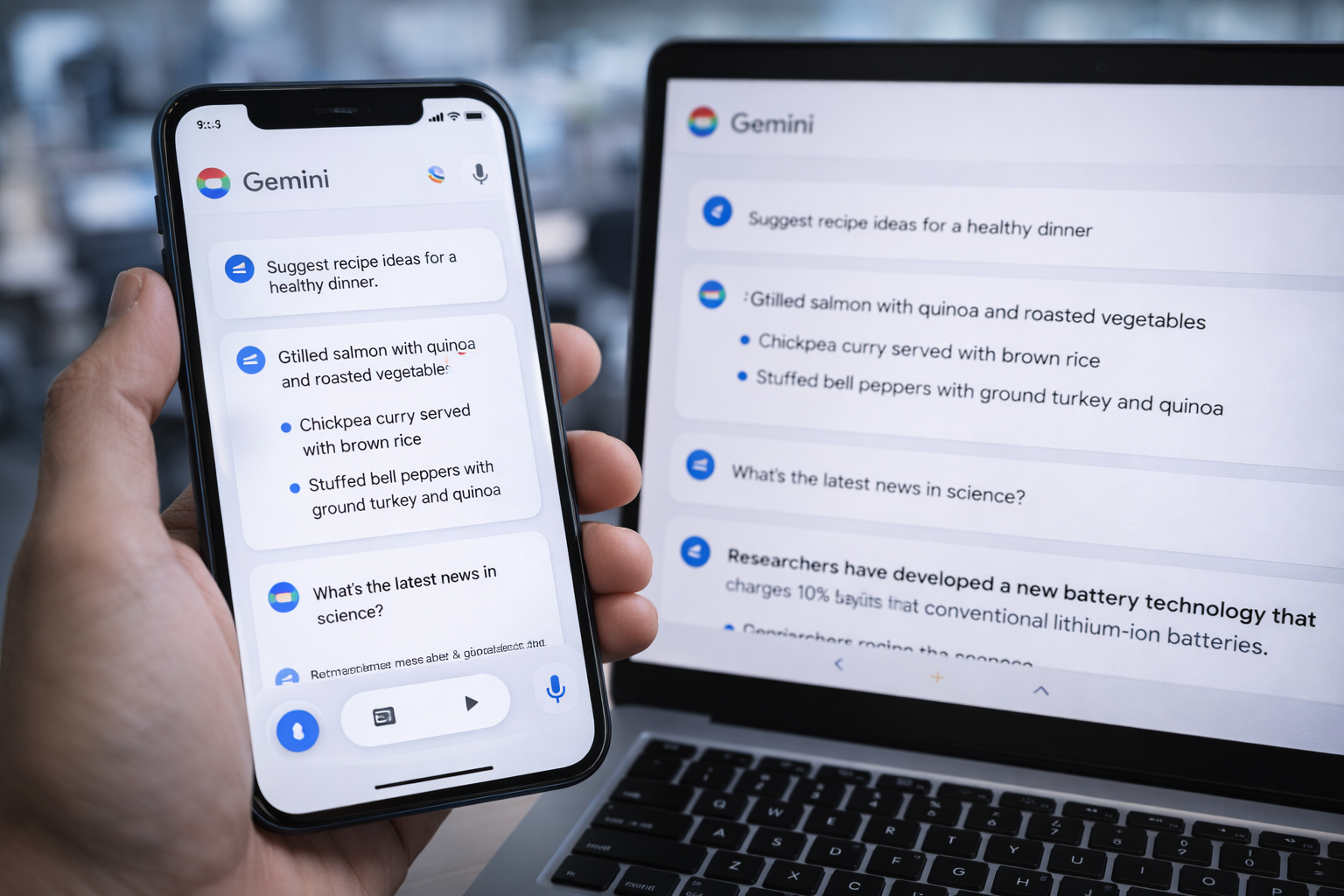

Google upgrades its AI lineup with a faster, smarter Gemini experience

Google has unveiled Gemini 3 Flash, the latest iteration of its AI model family, and has made it the default model in the Gemini app and AI Mode in Search. This move marks a major step in bringing advanced, multimodal AI capabilities to everyday users and developers alike.

Gemini’s evolution in the AI landscape

Google’s Gemini series competes directly with other leading AI models on the market, such as OpenAI’s GPT-5.2. Following the launch of Gemini 3 Pro last month, the company has expanded the lineup to include Flash, a model designed to balance performance, speed, and cost-efficiency.

Gemini models have steadily improved their multimodal capabilities, meaning they can process and reason over text, images, audio, and video. This makes them versatile across a wide range of tasks. Gemini 3 Flash continues this trend with next-generation reasoning and efficiency.

What’s new with Gemini 3 Flash

With today’s announcement, Google is rolling out Gemini 3 Flash globally and setting it as the default model in both the Gemini app and AI Mode in Search. This change replaces the previous Gemini 2.5 Flash model and delivers a noticeable upgrade in speed and capability.

Key features of Gemini 3 Flash include:

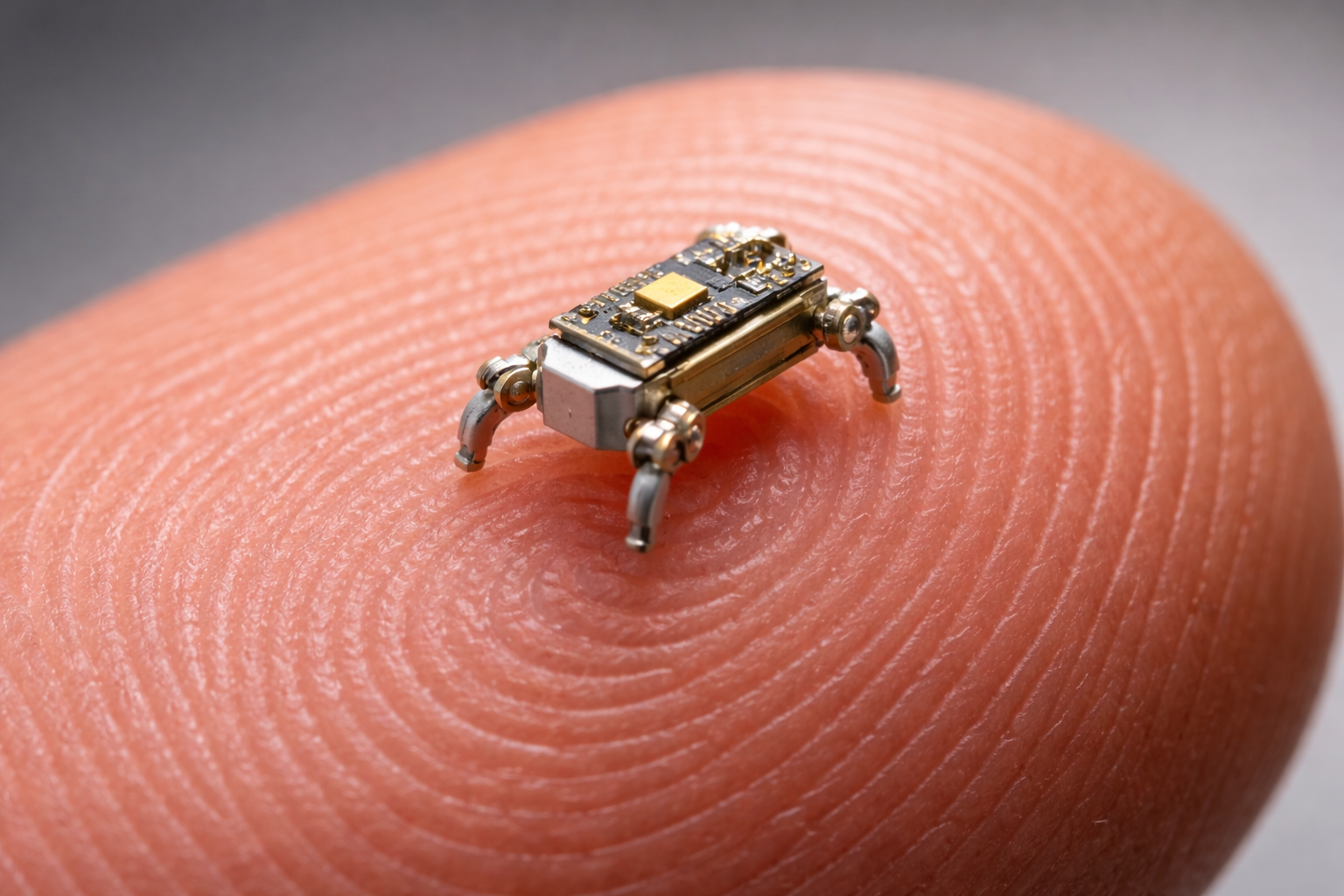

- Fast performance at lower cost: Designed for speed, Flash offers frontier-level AI reasoning with lower latency and token usage than earlier models.

- Strong reasoning and multimodal skills: The model excels at understanding complex queries across text, images, audio, and video.

- Default for everyday use: Because Flash is now the default option, most users will interact with Gemini 3 Flash without needing to switch models.

- Developer access worldwide: The Flash model is available through platforms such as the Gemini API, Google AI Studio, Vertex AI, Antigravity, and Gemini CLI, enabling broader integration into apps.

Users can still choose Gemini 3 Pro through the model picker for tasks that require deeper reasoning or advanced coding support.

Expert Insight: What industry voices are saying

Tech analysts view Gemini 3 Flash as a strategic move by Google to democratise access to powerful AI. By lowering costs and improving speed, the Flash model bridges the gap between high-end AI performance and everyday usability. Developers and companies integrating AI into their products can now leverage Flash for fast, reliable reasoning without incurring prohibitive costs.

One industry observer noted that “Gemini 3 Flash brings Pro-grade intelligence to a broader audience, making advanced AI features more accessible both for individual users and enterprise applications.”

AI for everyone, not just experts

The shift to make Gemini 3 Flash the default model reflects a broader trend in AI: bringing high-quality intelligence to mainstream users. Everyday tasks, from visual analysis and video interpretation to understanding user intent, are now within reach for non-technical audiences.

This matters because:

- Access to advanced AI becomes more affordable: Users don’t need specialised subscriptions or tools.

- Smarter search and assistance: Gemini can generate richer, context-aware answers inside Google Search and the app.

- Enhanced multimodal interactions: Uploading videos, sketches, or audio now yields actionable insights quickly.

Continued evolution and competition

As Google expands Gemini 3 Flash, competition with other AI leaders, particularly OpenAI and its GPT-5.2, is heating up. Recent launches from OpenAI illustrate an ongoing race to deliver more powerful and usable AI models, which may accelerate innovation across the industry.

Developers can expect more updates to APIs and tooling that take advantage of Flash’s speed, while users may soon see deeper integration across Google products such as Workspace apps like Docs and Sheets.

A faster, smarter default AI for the masses

With Gemini 3 Flash now set as the default model in the Gemini app and AI Mode in Google Search, Google is placing high-performance AI into the hands of millions. The model’s blend of speed, reasoning, and multimodal capabilities promises to redefine everyday interactions with artificial intelligence, making powerful tools accessible to users, creators, and developers alike.